Deploying an OAuth Proxy for Internal Kubernetes Applications

A reverse proxy and static file server that provides authentication using Providers (Google, GitHub, and others) to validate accounts by email, domain or group.

This component acts as a common authentication proxy that protects the internal resources of a Kubernetes cluster through SSO mechanisms such as Google SSO, and a single instance can serve all internal applications and Ingresses in the cluster. For example, a few Ingresses could be placed behind the Google OAuth login screen so that only employees of the organization, i.e. users with an @organization_domain.com email address, can sign in.

To implement this proxy, we will be using an open source tool called OAuth2 Proxy. It was originally created by Bitly, but Bitly later stopped maintaining it; the project was then forked and is now maintained by an independent organization dedicated to it.

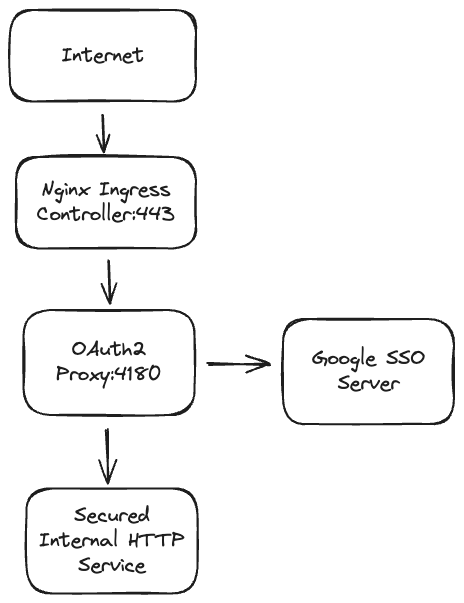

The proxy's architecture inside the cluster will look something like this:

Proxy Deployment

In this tutorial, I will walk through deploying a single instance of the proxy in an isolated namespace, which can then serve applications across multiple namespaces. We will manage everything with plain Kubernetes YAML files and Kustomize. Kustomize is a template-free way to customize application configuration and Kubernetes manifests, and it is built into kubectl via the `-k` flag. To package the whole application with Kustomize, create a folder named oauth2-proxy and add a kustomization.yaml file to it (empty for now), which will act as the entry point for the whole application.
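As a rough sketch, once all the manifests from this tutorial exist, kustomization.yaml could list them as resources and place them all in one namespace. The namespace name `oauth2-proxy` and the Ingress file name `proxy-ingress.yaml` are assumptions, since this post does not name them.

```yaml
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
# Assumed namespace name: every listed resource is placed in it
namespace: oauth2-proxy
resources:
  - proxy-dep.yaml
  - configMap.yaml
  - redis-dep.yaml
  - proxy-svc.yaml
  - redis-svc.yaml
  # Hypothetical file name for the Ingress defined later in this post
  - proxy-ingress.yaml
```

With this in place, `kubectl apply -k oauth2-proxy/` applies everything in one go. The `namespace` field only sets the namespace on the listed resources and does not create it, so create the namespace beforehand, for example with `kubectl create namespace oauth2-proxy`.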

Deployment File (proxy-dep.yaml)

In the oauth2-proxy folder, create a file named proxy-dep.yaml.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: oauth2-proxy
  labels:
    app: oauth2-proxy
spec:
  replicas: 1
  selector:
    matchLabels:
      name: oauth2-proxy
  template:
    metadata:
      labels:
        name: oauth2-proxy
    spec:
      volumes:
        - name: oauth2-proxy-config
          configMap:
            name: oauth2-proxy-config
      containers:
        - image: quay.io/oauth2-proxy/oauth2-proxy:latest
          name: oauth2-proxy
          ports:
            - containerPort: 4180
          volumeMounts:
            # Mount only the oauth2-proxy.cfg key of the ConfigMap as a single file
            - name: oauth2-proxy-config
              mountPath: /etc/oauth2-proxy.cfg
              subPath: oauth2-proxy.cfg
          args:
            - --config=/etc/oauth2-proxy.cfg
```

You can copy and paste this file as-is, but a few parts are worth explaining:

- We are using `quay.io/oauth2-proxy/oauth2-proxy:latest` as the image, which is the official Docker image published by the project (the older `quay.io/pusher/oauth2_proxy` image is no longer maintained). In production, consider pinning a specific release tag instead of `latest`. For compliance reasons, you may want to host this image in your own registry. In that case, you can clone the official repository, build the Docker image yourself, and push it to your private container registry. You can then use the `imagePullSecrets` property of the Pod spec to supply your registry credentials.
- We mount a volume named `oauth2-proxy-config`, backed by a ConfigMap, at `/etc/oauth2-proxy.cfg`, and point the proxy at that file with the `--config` argument. This file holds our SSO configuration. We will create the ConfigMap in the next step.
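For the private-registry case described above, the relevant part of the Pod template might look like the fragment below. The registry host, image path, tag, and Secret name are all hypothetical; the Secret would be of type `kubernetes.io/dockerconfigjson`, which you can create with `kubectl create secret docker-registry`.

```yaml
# Fragment of proxy-dep.yaml showing only the fields that change.
spec:
  template:
    spec:
      # Hypothetical Secret holding the private registry credentials
      imagePullSecrets:
        - name: my-registry-credentials
      containers:
        - name: oauth2-proxy
          # Hypothetical image path in your private registry
          image: registry.example.com/platform/oauth2-proxy:v7
```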

ConfigMap (configMap.yaml)

The ConfigMap used to store the configuration for the proxy will look like this:

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: oauth2-proxy-config
data:
  # The config file uses TOML syntax, so string values must be quoted.
  oauth2-proxy.cfg: |-
    provider="google"
    provider_display_name="Google"
    client_id="<GOOGLE_CLIENT_ID>"
    client_secret="<GOOGLE_CLIENT_SECRET>"
    cookie_secret="<BASE64_SECRET>"
    http_address="0.0.0.0:4180"
    upstreams=["file:///dev/null"]
    email_domains=["domain.com"]
    cookie_domains=[".internal.domain.com"]
    whitelist_domains=[".internal.domain.com"]
    session_store_type="redis"
    redis_connection_url="redis://redis-service:6379"
```

Some important properties in this config are:

- `provider`: the SSO provider we are using; here, Google OAuth.
- `client_id`: the client ID of the OAuth application registered with the SSO provider.
- `client_secret`: the client secret of that OAuth application.
- `cookie_secret`: a random base64 string used to sign and encrypt the cookies the proxy stores in the browser. You can generate one with openssl:

  ```shell
  openssl rand -base64 32 | tr -- '+/' '-_'
  ```

- `email_domains`: the email domain(s) of the users you want to allow to sign in. Ideally, this is your organization's domain, so that only addresses like user@domain.com are accepted.
- `cookie_domains`: the `Domain` attribute of the session cookie. OAuth2 Proxy sets a cookie in the browser to keep track of the authenticated user, and prefixing the domain with a `.` lets that cookie be shared across all of its subdomains. For example, if we set the cookie domain to `.sub.domain.com`, any host under it, like `dashboard.sub.domain.com`, automatically receives the cookie and is authorized by the proxy, even if the user signed in through a different subdomain. Ideally, you want a dedicated subdomain for everything internal to the organization, such as `internal.domain.com`, and set `cookie_domains` to `.internal.domain.com`.
- `whitelist_domains`: the domains the proxy is allowed to redirect users back to after a successful login. It usually takes the same value as `cookie_domains`.
- `session_store_type`: by default (`cookie`), OAuth2 Proxy keeps the whole session in browser cookies. With `redis`, the session data is stored in a small Redis database and the browser cookie only holds a reference to it, which keeps cookies small. Redis is the recommended option here; you can read more about it in the session storage section of the OAuth2 Proxy documentation.
- `redis_connection_url`: the connection URI of the Redis database. We will deploy a small Redis instance in the same namespace in the next step.
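Because the ConfigMap above holds the client secret and cookie secret in plain text, you may prefer to keep those values in a Kubernetes Secret. OAuth2 Proxy reads any option from an environment variable named `OAUTH2_PROXY_` followed by the option name in upper case, so a minimal sketch could look like the following. The Secret name `oauth2-proxy-credentials`, its keys, and the file name are hypothetical; if you adopt this, add the file to your Kustomize resources and remove `client_id`, `client_secret`, and `cookie_secret` from the ConfigMap so each value has a single source.

```yaml
# Hypothetical secret.yaml: holds the credentials instead of the ConfigMap
apiVersion: v1
kind: Secret
metadata:
  name: oauth2-proxy-credentials
type: Opaque
stringData:
  client-id: "<GOOGLE_CLIENT_ID>"
  client-secret: "<GOOGLE_CLIENT_SECRET>"
  cookie-secret: "<BASE64_SECRET>"
---
# Fragment (not a standalone manifest): add under the oauth2-proxy
# container in proxy-dep.yaml so the proxy reads the values from the Secret.
env:
  - name: OAUTH2_PROXY_CLIENT_ID
    valueFrom:
      secretKeyRef:
        name: oauth2-proxy-credentials
        key: client-id
  - name: OAUTH2_PROXY_CLIENT_SECRET
    valueFrom:
      secretKeyRef:
        name: oauth2-proxy-credentials
        key: client-secret
  - name: OAUTH2_PROXY_COOKIE_SECRET
    valueFrom:
      secretKeyRef:
        name: oauth2-proxy-credentials
        key: cookie-secret
```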

Apart from Google, the project supports several other SSO providers. Their configuration options are described in the provider section of the OAuth2 Proxy documentation.

Redis Deployment (redis-dep.yaml)

To store the proxy's sessions server side, we can deploy a small Redis instance alongside the proxy Deployment. The Redis Deployment looks like this:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: redis
spec:
  selector:
    matchLabels:
      app: redis
  replicas: 1
  template:
    metadata:
      labels:
        app: redis
    spec:
      containers:
        - name: redis
          image: redis:latest
          ports:
            - containerPort: 6379
          resources:
            limits:
              cpu: "0.5"
              memory: "512Mi"
          command: ["redis-server"]
          # Disable RDB snapshots and AOF persistence: sessions live in memory only
          args: ["--save", "", "--appendonly", "no"]
```

Creating services for OAuth2 Proxy and Redis

Once the Deployments for both the proxy and Redis are applied, we have to expose them as Services so that other pods can reach them.

We can do that by creating a proxy-svc.yaml file for the proxy Service:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: oauth2-proxy
  labels:
    app: oauth2-proxy
spec:
  ports:
    - name: http
      port: 4180
      protocol: TCP
      targetPort: 4180
  selector:
    name: oauth2-proxy
```

And similarly for redis, we can create a redis-svc.yaml file:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: redis-service
spec:
  selector:
    app: redis
  ports:
    - protocol: TCP
      port: 6379
      targetPort: 6379
```

Once deployed, `redis://redis-service:6379` can be used as the Redis connection URL for the proxy. The Service uses the default ClusterIP type, so it is not exposed outside the cluster, and for simplicity we don't configure Redis authentication here. Keep in mind that a ClusterIP Service is still reachable from any pod in the cluster unless you restrict traffic with a NetworkPolicy.
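If you want only the proxy to be able to talk to Redis, a NetworkPolicy along these lines could restrict it. This is a sketch: it relies on the `app: redis` and `name: oauth2-proxy` pod labels from the Deployments above, the policy name is hypothetical, and it only takes effect if your cluster's network plugin enforces NetworkPolicy.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: redis-allow-oauth2-proxy
spec:
  # Applies to the Redis pods in this namespace
  podSelector:
    matchLabels:
      app: redis
  policyTypes:
    - Ingress
  ingress:
    # Only pods labelled name: oauth2-proxy (same namespace) may connect on 6379
    - from:
        - podSelector:
            matchLabels:
              name: oauth2-proxy
      ports:
        - protocol: TCP
          port: 6379
```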

Ingress

Finally, we will have to expose our OAuth2 proxy on a public domain so that it can be used by various services inside or outside the cluster.

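You should choose a generic domain for the proxy, something like employee-internal.company.com, since all internal services will use the same URL to authenticate. The domains in this post are placeholders: whatever you choose, the proxy's domain must fall under the value you set in `cookie_domains` (for example, with `.internal.domain.com`, a host such as `auth.internal.domain.com`), otherwise the browser will not share the session cookie between the proxy and your applications.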

If you already have an Ingress Controller and cert-manager set up, you just need to point a DNS record for this domain at your Load Balancer's IP, and a single Ingress manifest takes care of the rest.

The ingress for the proxy will look like this:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: oauth2-proxy
  annotations:
    cert-manager.io/issuer: letsencrypt-nginx
spec:
  # spec.ingressClassName replaces the deprecated kubernetes.io/ingress.class annotation
  ingressClassName: nginx
  tls:
    - hosts:
        - employee-internal.company.com
      secretName: oauth2-proxy-cert
  rules:
    - host: employee-internal.company.com
      http:
        paths:
          - path: /oauth2
            pathType: Prefix
            backend:
              service:
                name: oauth2-proxy
                port:
                  number: 4180
```
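The `cert-manager.io/issuer: letsencrypt-nginx` annotation assumes that a cert-manager Issuer named `letsencrypt-nginx` already exists in the same namespace as the Ingress. If you don't have one yet, a minimal ACME Issuer using Let's Encrypt with the HTTP-01 challenge might look like this; the email address and the account key Secret name are placeholders.

```yaml
apiVersion: cert-manager.io/v1
kind: Issuer
metadata:
  # Must match the cert-manager.io/issuer annotation on the Ingress
  name: letsencrypt-nginx
spec:
  acme:
    server: https://acme-v02.api.letsencrypt.org/directory
    # Placeholder contact address for Let's Encrypt expiry notices
    email: admin@company.com
    privateKeySecretRef:
      # Placeholder name of the Secret that stores the ACME account key
      name: letsencrypt-nginx-account-key
    solvers:
      # Solve HTTP-01 challenges through the nginx ingress class
      - http01:
          ingress:
            class: nginx
```

If you would rather share one issuer across namespaces, cert-manager also offers a cluster-scoped ClusterIssuer, referenced with the `cert-manager.io/cluster-issuer` annotation instead.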

Using the Proxy

Once the proxy is live, and if you are using the open source NGINX Ingress Controller, you can protect any other Ingress in the cluster by adding two annotations:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ...
  annotations:
    kubernetes.io/ingress.class: nginx
    nginx.ingress.kubernetes.io/auth-signin: https://employee-internal.company.com/oauth2/start?rd=https%3A%2F%2F$host
    nginx.ingress.kubernetes.io/auth-url: https://employee-internal.company.com/oauth2/auth
    ...
spec:
  ...
```
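Putting it together, a complete Ingress for a hypothetical internal dashboard might look like the sketch below. The app name, namespace, Service, and hosts are all assumptions; in particular, it assumes the proxy is served at `auth.internal.domain.com` so that both the proxy and the app fall under the `.internal.domain.com` cookie and whitelist domains configured earlier.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: dashboard            # hypothetical internal app
  namespace: monitoring      # hypothetical; the proxy lives in its own namespace
  annotations:
    # Each request is first checked against the proxy's auth endpoint
    nginx.ingress.kubernetes.io/auth-url: https://auth.internal.domain.com/oauth2/auth
    # Unauthenticated users are sent here, then redirected back to this host
    nginx.ingress.kubernetes.io/auth-signin: https://auth.internal.domain.com/oauth2/start?rd=https%3A%2F%2F$host
spec:
  ingressClassName: nginx
  rules:
    - host: dashboard.internal.domain.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: dashboard  # hypothetical Service
                port:
                  number: 80
```

When a request arrives without a valid session, the NGINX Ingress Controller calls the `auth-url`, receives a 401, and redirects the browser to the `auth-signin` URL, which starts the Google login and, on success, sends the user back to the host given in `rd`.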

I will be writing a separate post soon on Ingress Controllers, the NGINX Ingress Controller, and how to set up and use cert-manager to automatically manage SSL certificates for Ingresses in Kubernetes.

Thank you for reading to the end of the post. If you liked it, please share it with your network. If you find any errors or discrepancies, feel free to email me at any time, and I'll get back to you.